Failing to Make 1 Dollar

The goal was simple. I would make 1 dollar from an app that I made. It wouldn’t be enough money to retire on, but it would be a good start. The plan was to finish the app in a few months. Unfortunately, it has been over a year and I have still not made that 1 dollar. I am writing this in the hopes of understanding my failure, going over the details of what I have built so far and finally figuring out a way forward.

I had built a few personal projects over the years, but this was the first time I set out with the explicit goal of making money. It wasn’t that I was opposed to making money before, but I regarded it as something that was maybe beneath me. I won’t go into why I had that belief, or why I think I believed it, just that I now believe that I was wrong. If I look at my fundamental desires right now, I would say that they are my own freedom, the well-being of my family and sometimes a cheeseburger. I have come to accept that not all of these desires will be satisfied working for someone else. A job can offer me a comfortable life that is beyond what I imagined growing up. However, there is a limit to what I can do to help take care of my family in the long term. It is one thing to send money here and there. It is another to help someone retire to a comfortable life. As a final note, I am trying to straddle a line in my writing where I want to be useful both to myself and to you, the reader. I hope this mix of the personal and technical works for you.

The Project

I have always had an interest in movies. One of my previous failed projects had to do with a little short film. Even on projects that were ostensibly unrelated to movies, I would think about how I would present the project, maybe in some sort of video. So it made sense to me to pick video as the area I would focus on. I expected to have to learn a ton of stuff, so I figured that I might as well be in an area where I had some natural interest. I also wanted to create a prototype quickly, so I knew that I needed to pick a small project that I could finish in a month or two. I am writing this a year after I started working on the project, so the early days of the project are a bit hazy. Nevertheless, I think it is worth talking about how, given these constraints, I made the brilliant decision to work on a video editor.

The initial idea was to do a simple video tool: a simple website that would take a video and return some information, like getting the transcript or maybe converting the video to another format. There are a bunch of these websites online, so I thought I could do that and at least learn the underlying tech that powers video today. I wrote the first line of code for the video tool in May 2024. However, within a few weeks, OpenAI released GPT-4o, a multimodal model, which means a model that can process modalities of data besides text, like images and audio. This turned my simple website for processing video data into an AI video editor. The idea was that you would upload a bunch of videos and tell it what kind of result you wanted to see, and it would do the edit for you.

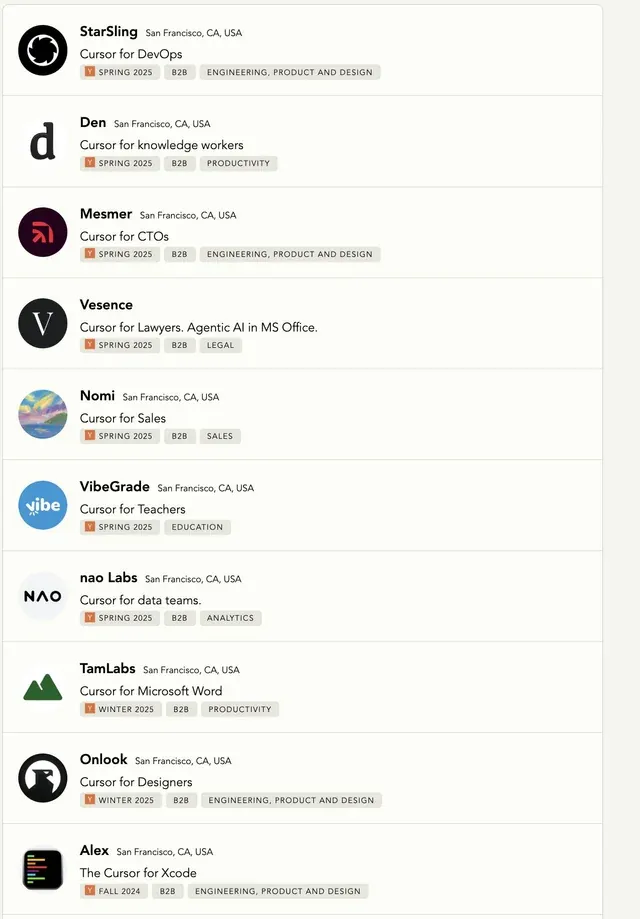

If you have the fortune/misfortune of following the tech scene, you know that AI has driven a frenzy of new features and products. It is not hard to find a plethora of AI-powered products that are not great, but there are exceptions that I personally love. One example is Cursor, an AI-powered code editor that I have been using for my personal work. Cursor, if you are not familiar, is basically a traditional code editor with an AI model that has access to the context of your project. You chat with a model of your choice in a chat window to the side. It is hard to overstate how much more useful this makes the AI models. There are of course limitations, but I was amazed at how useful it could be, and it continues to improve as the product and models improve. I guess I was gripped by the mania, and my idea mutated into Cursor for video editing. This was of course a genius idea that was not obvious to anyone else at all.

As this was my first business, my ambitions were much simpler than those of a venture-backed startup. I just wanted to make something that was good enough for people to pay a monthly subscription. First get that 1 dollar and then grow it as much as I could, which is easier said than done. I had/have my engineer brain, so I was mostly concerned with making the prototype and thought little about the market or competition, which was probably not a wise thing to do.

Videos for dummies (from a dummy)

Before I discuss my efforts to build a video editor, let me step back and explain some fundamental things about video files. Video files are just a bunch of data or, to be more exact, a container for a bunch of data. There are two processes to consider when it comes to this data: the first is recording the data and the second is playing that data back. Let us go over, at a high level, the process of recording a video on your phone and playing it back.

Let us say you are pointing your phone at some fireworks because it is July 4th and you are a pyromaniac and this is the one day of the year you get to indulge your fetish. As the fireworks explode in brilliant color and violent sounds, light and sound waves propagate outward. These physical waves reach the sensors on your phone. These would be a 2-dimensional grid for video and a 1-dimensional membrane (diaphragm) for audio. The energy from these waves is converted into numbers. The sensors do this by generating an electric signal. For example, the audio sensor generates a rapid stream of numbers that records the microphone’s voltage as it rises and falls with the air pressure changes of the sound wave pushing on its diaphragm. For video, the sensor notes how much red, green and blue light hits each spot in the grid and combines neighboring readings into pixels. The electric signals are sampled at a certain rate. For video it would be a certain number of frames per second and for audio it would be samples per second (hertz). These raw sensor numbers then go through some processing to convert them to commonly used formats. For example, the raw video sensor data, which tends to be a 2D grid of red, green and blue (RGB) values, gets converted into a format called YUV, which separates brightness from color information and is more suited to video. For audio, we use pulse-code modulation (PCM), which is just the stream of voltage readings mentioned before.
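To make the RGB to YUV step concrete, here is a minimal Python sketch of converting a single pixel. It assumes 8-bit full-range values and the BT.601 coefficients used by JPEG; a real phone pipeline may use a different matrix (such as BT.709) or a limited value range, so treat the function name `rgb_to_yuv` and the exact numbers as illustrative rather than what any particular camera does.

```python
def clamp_to_byte(value):
    """Keep a value inside the 0..255 range of an 8-bit sample."""
    return max(0, min(255, value))


def rgb_to_yuv(r, g, b):
    """Convert one 8-bit RGB pixel to full-range YUV (YCbCr).

    Assumption: BT.601 coefficients, full range, as used by JPEG.
    Y carries brightness; U and V carry color and sit at 128 when
    the pixel has no color at all (black, gray, white).
    """
    y = 0.299 * r + 0.587 * g + 0.114 * b
    u = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    v = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return tuple(clamp_to_byte(round(x)) for x in (y, u, v))


if __name__ == "__main__":
    print(rgb_to_yuv(255, 255, 255))  # white: full brightness, neutral color
    print(rgb_to_yuv(255, 0, 0))      # red: dim brightness, strong V
```

Separating brightness from color is what later lets a codec store the color channels at lower resolution than the brightness channel, since our eyes are less sensitive to fine color detail.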

Usually this raw data is captured at high precision, so there is a reduction to a lower-precision format, referred to as quantization. Some data might be discarded if it is beyond the limits of human perception, for example inaudible sound frequencies and very subtle color differences. There is also compression, which aims to minimize the space taken by the data while minimizing perceptible artifacts. The coder/decoder (codec) used depends on the type of data. Some examples would be H.264 and AV1 for video and AAC and Opus for audio. Another important point is that some data, like video, can be redundant. How would you compress the data for your riveting fireworks masterpiece? You could just compress each frame individually. But if you are just pointing at the sky, most frames look very similar. Another approach would be to store a complete frame at the start of each scene and, for each subsequent frame of that scene, just store the differences, because a lot of the data for each scene is redundant. The frames that serve as anchor points for the compression are called keyframes (intra frames, or I-frames) and the frames storing the differences are called predictive frames (P-frames). There are also bidirectional frames (B-frames), which use data from frames before and after them. Finally, you have these compressed streams of video and audio data and you need a container for them. A video file is just that: a container format (think MP4) for these streams of compressed data. The process of combining the streams into the container is called muxing. Hurray, we have a video file on our phones!
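The keyframe and difference idea can be sketched with a toy example in Python. This is not how H.264 or AV1 actually work (real codecs use motion compensation, transforms and entropy coding, and B-frames are left out entirely); it only illustrates storing one full frame and then just the pixels that changed. Frames here are plain lists of numbers of equal length, and `encode`, `decode` and `keyframe_interval` are hypothetical names chosen for the illustration.

```python
def encode(frames, keyframe_interval=30):
    """Store a full frame every `keyframe_interval` frames, diffs otherwise."""
    encoded = []
    previous = None
    for index, frame in enumerate(frames):
        if previous is None or index % keyframe_interval == 0:
            # Keyframe (I-frame): a complete copy of the frame.
            encoded.append(("I", list(frame)))
        else:
            # Predictive frame (P-frame): only positions that changed.
            changes = {
                position: new
                for position, (old, new) in enumerate(zip(previous, frame))
                if old != new
            }
            encoded.append(("P", changes))
        previous = frame
    return encoded


def decode(encoded):
    """Rebuild every frame by applying diffs on top of the last frame."""
    frames = []
    current = None
    for kind, payload in encoded:
        if kind == "I":
            current = list(payload)
        else:
            current = list(current)
            for position, value in payload.items():
                current[position] = value
        frames.append(current)
    return frames


if __name__ == "__main__":
    night_sky = [[0, 0, 0, 0], [0, 9, 0, 0], [0, 9, 9, 0]]
    for entry in encode(night_sky):
        print(entry)
```

Notice that decoding a P-frame requires walking forward from the most recent keyframe. That dependency is why seeking to an arbitrary point in a video is cheaper when keyframes are frequent.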

Now let us say you have a friend who is as much a pyromaniac as you are and you decide to email them your fireworks footage. When they open the file on their computer, their video player figures out the container format and demuxes (separates the streams in) the video file. After finding the streams, it decodes the video and audio streams piece by piece. These decoded pieces of data are processed so that they are suitable for the output devices on their computer, such as displays or speakers. For example, the decoded frames in YUV format are converted into RGB values, which are then used by the display to emit light. For audio, the decoded PCM values are converted into voltages, which are then amplified and used by the speaker to move air, which we experience as sound. And like that we have finished the round trip of making a recording that captures some energy in the world, storing that data and then using that data to reproduce that energy, to a reasonable degree, at another point in space and time.

Finally, a video editor is a program that transforms the data in these files whichever way we see fit and then writes the result back out to a video file. For example, say your comrade wanted to create an edit of your footage set to the dance track Firestarter by the Prodigy. When your friend imports the file into their editor of choice, the editor, like the video player, finds the video and audio streams. However, instead of only decoding the compressed data streams into a raw format for display, video editors often transcode from one compressed format into another format that is more lightweight for playback when editing. These transcoded files are called proxies. The video editor also collects data that it needs to make seeking quick, for example the positions of keyframes in the video streams. Your friend then creates clips from the imported files and arranges them in a timeline. It is important to note that the video editor does not create copies. It is just creating a recipe. A clip is not a copy of a portion of a video file but just a reference to the source file with the time range we care about. This is true for all the transformations that rearrange or cut the video file. There are other transformations, such as effects, which are mathematical manipulations of the underlying data. Say your friend wanted to digitally zoom into a certain segment of your footage. The editor would decode the relevant frames, apply the mathematical transformation to bring the frame closer and then either display the result if previewing or encode it if exporting to a file. Once your friend has arranged their masterpiece with the appropriate cuts, zooms and effects, they can export “july_4th_fire_edit_final_v5.mp4”, which writes their work into a new video file.
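The "recipe, not copy" idea can be sketched in a few lines of Python. This is a hypothetical data model, not the one used by any particular editor: a `Clip` only records which source file it points to and which time range it covers, and `resolve` maps a time on the timeline back to a time in the source file.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Clip:
    """A reference into a source file. No video data is copied."""
    source: str   # path to the imported file
    start: float  # where the clip begins in the source, in seconds
    end: float    # where the clip ends in the source, in seconds

    @property
    def duration(self):
        return self.end - self.start


def resolve(timeline, t):
    """Map a timeline time to (source file, time inside that source)."""
    if t < 0:
        raise ValueError("timeline time must not be negative")
    offset = 0.0
    for clip in timeline:
        if t < offset + clip.duration:
            return clip.source, clip.start + (t - offset)
        offset += clip.duration
    raise ValueError("time is past the end of the timeline")


if __name__ == "__main__":
    # Two cuts from the same file: seconds 10-15, then seconds 40-42.
    timeline = [
        Clip("fireworks.mp4", 10.0, 15.0),
        Clip("fireworks.mp4", 40.0, 42.0),
    ]
    print(resolve(timeline, 6.0))  # one second into the second clip
```

Because the timeline is only a list of references, rearranging or trimming clips is instant. The expensive decoding and encoding only happens when previewing or exporting.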

Video Editor v1

The first version of the editor was made with the hope of moving as quickly as possible to get a prototype and then ship it. The high-level plan for the user experience of the app was something like this. The user creates a project and adds their video files. The user asks for an edit through a chat interface. The AI looks at the video files and creates an edit. The user then previews the edit and provides feedback in the chat. The model then creates a different edit. This loops until the user is happy and exports to a video file.

My previous projects were usually built around some shiny new thing, so I decided to be more conservative in the hopes of being more focused. I picked the most popular set of technologies that I could find. For context, I work as a programmer at my day job but not in web development, so I did have quite a bit to learn. If you are unfamiliar with programming for the web, the main thing you need to understand is that you have to think in terms of [two computers](https://overreacted.io/react-for-two-computers/). The first is the user’s computer (the client), which requests data from your computer, the programmer’s (the server), through the internet. The server then responds with the data. It doesn’t literally have to be your computer. Most people rent their servers, but the basic idea holds. I was starting out, so I felt better farming out fundamental pieces of the app to companies that had specialized in them. This meant using a framework, Next.js, which made it easy to have a website up and running using the framework author’s hosting service, Vercel. I also used Supabase, a service which offers a lot of what you need to build a traditional web app, stuff like a database and file storage. In retrospect, this would have been a reasonable stack if I was building a more traditional web app where you are mostly hitting a database for some information and showing it to the user. However, what I wanted to force myself to do in this project was to pick the most common tools that I could so that I could just get to the work of building the thing. This was actually a good thing for me.

One of the earliest questions that I faced with the video editor was where to do the majority of the video processing. Should I do it on the user’s computer or on the server? My initial instinct was to do it on the user’s computer, so the initial implementation that I settled on performed as much of the video processing on the user’s machine as possible. The video processing would be done using FFmpeg, the de facto standard for video processing, which was possible using ffmpeg.wasm, a version of FFmpeg that can run in the user’s browser. I also decided to store the user’s files on the user’s machine using a local database that comes with browsers called IndexedDB. The main reason was that I wanted the user to get started immediately using the app. I would figure out how to sync the video files to the cloud later, which was quite naive, but I didn’t think too deeply about it. I would later learn about the local-first movement, which is focused on local ownership of data while allowing for syncing and collaboration over the internet.

For video processing, my initial expectation was that I would just send the video to OpenAI and then have the model call FFmpeg to create an edit. However, at least at the time, you could not send videos to GPT-4o but you could send images. Google’s Gemini API did accept video, which it samples at 1 fps, but there are limitations on size and they only store files temporarily. To overcome the limitations on video data, I decided to sample frames from the video files and send the frames to GPT-4o. This system kind of worked: the model would call functions to extract frames using ffmpeg.wasm, and the frames would then be sent to the model so it could see what was in the video. However, it had severe limitations. It was so slow that it was not useful. Looking back, I could have looked into ways of indexing the video data so that the model didn’t have to extract frames each time it wanted to see the video, but I was rushing and too ignorant to research how other people solved similar problems.
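As a rough illustration of the frame sampling step, here is a Python sketch that builds an FFmpeg command line using the `fps` video filter, which resamples a video to a fixed frame rate. The helper names `frame_sampling_args` and `sample_timestamps` and the file names are hypothetical, and the sketch only builds the argument list for the regular `ffmpeg` command line tool. It does not run anything and it is not the ffmpeg.wasm code from the project.

```python
def frame_sampling_args(input_path, output_pattern, fps=1):
    """Build an ffmpeg argument list that writes `fps` frames per second.

    `-vf fps=N` applies FFmpeg's fps filter; the output pattern
    (for example frame_%04d.jpg) makes ffmpeg write numbered images.
    """
    return ["ffmpeg", "-i", input_path, "-vf", f"fps={fps}", output_pattern]


def sample_timestamps(duration_seconds, fps=1):
    """Approximate timestamps the samples correspond to.

    Assumption: samples are evenly spaced starting at 0. FFmpeg's
    exact frame selection can differ slightly from this estimate.
    """
    timestamps = []
    index = 0
    while index / fps < duration_seconds:
        timestamps.append(index / fps)
        index += 1
    return timestamps


if __name__ == "__main__":
    print(" ".join(frame_sampling_args("fireworks.mp4", "frame_%04d.jpg")))
    print(sample_timestamps(3.5))
```

Extracting all the frames once up front and keeping them keyed by timestamp is one simple form of the indexing mentioned above: the model could then be handed an existing frame instead of triggering a fresh extraction every time.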

Discouraged by the unusable demo that I had made, I started to wonder if I should just bite the bullet, upload the video to a server, handle the processing there and then stream data over to the user. This would make things more predictable. I also decided that if I was committed to making something like an online video editor, I would need to move beyond off-the-shelf solutions.

Video Editor v2

The second version aimed to deliver a similar experience to the first version, with the main difference being that the user would upload files to our server, which would deal with the processing. Moving beyond off-the-shelf solutions meant learning what these solutions were protecting you from. That meant dealing with either your own hardware or, more realistically, a provider like Amazon Web Services (AWS). The fact that AWS was complex enough that you could make businesses worth millions/billions of dollars hiding that complexity didn’t make me excited to deal with it. However, AWS, as a pioneer in the space, has a rich ecosystem around it. You could find a wide range of articles, videos and documentation to help you. Furthermore, the AI models were pretty familiar with it. The best way to describe AWS is as a monster that shows many faces to many different types of people. After using AWS, I came to appreciate the services provided by companies like Vercel and Supabase.

Even though I was aiming to make a more custom application, I still tried to stay with boring, tried and true technologies. For the UI, I picked React, the predominant UI library, with Vite, the de facto standard build tool for JavaScript. The server would be an Express application with tRPC, which would ensure we could get typed data from the server to the client. The client and server would each be in their own Docker containers. Containers, if you are not familiar, allow you to package all the dependencies for your project, including the operating system environment. This means that you can switch machines easily or even share machines, which is cheaper. These containers would then be deployed to AWS’s container service. The data for the app would be stored in a PostgreSQL database hosted on AWS’s Relational Database Service (RDS). The files would be hosted on AWS’s storage service (S3). Like so many things, uploading and downloading files is a can of worms. I got my first surprise when I wrote code to upload the file straight to my server, which apparently is not standard practice. AWS charges you for bandwidth, which apparently doesn’t cost them that much. I think there are alternative hosting providers that don’t do this, but I don’t have the desire to move infra. This helped me appreciate the hatred that AWS inspires. There are a bunch of things like this in AWS, where most things will be reasonably priced or even cheap but there will be this one feature which is so obscenely priced that it is shocking. Anyway, the “right” way to do it is to send the user a unique link (a presigned URL) so that they can upload their files directly to S3, and then your server downloads the file from S3, which makes things slower.

I have mentioned quite a bit of infrastructure, which would be a nightmare to manage by hand, but I learned that it was possible to manage all this infrastructure as code. By using Infrastructure as Code (IaC) tools, you can manage the machines and services that make up your web app. I tried a few different tools for this, including AWS’s IaC tools, but settled on Terraform, which was more reliable. For video processing, I initially started with just programmatically issuing ffmpeg commands, but this was brittle, and the more complex the pipeline became, the more brittle it became. I eventually found it painful enough to just write a video processing library that links to the FFmpeg C libraries. This was not as bad as I thought it would be. One thing that you have to be careful of is which parts of the FFmpeg code you link to in your project. FFmpeg code is licensed as either LGPL (you don’t have to open source your code) or GPL (you have to open source your code). Fortunately, you can build your own version of FFmpeg where you disable anything that is not LGPL. You do lose access to the encoder for one of the main codecs, H.264, but hardware manufacturers usually have their own encoders that you can use. If that fails, you can use encoders like [OpenH264](https://github.com/cisco/openh264), which have more permissive licenses.

Overall, the theme of this second version was working directly with the technology that was important to the success of the project. Now you might be wondering why I have not shipped this version then. The main issue is that processing on the server has a major disadvantage, which is that it is slow to upload and download files. This was a disadvantage that I was aware of, but I didn’t appreciate how deeply it would compromise my own experience of using my own editor. As a user, I didn’t even want to use my own project. I felt like that was a bad sign. I realized that I needed to change directions again. This was a painful realization of course, and one that I avoided thinking about for a few months. At this point I am a year into the project with nothing to show for it. I decided to make an even more cut-down version of the project. I think the core idea is an AI-powered video editor, and adding the online part was causing too much of a complication. As a result, my next step is going to be to make a native app that I am excited to use. I want it to be fast and to allow me to make videos in a way that is very customizable. I will dig into what that means in a future post when I launch it.

Do I want what I say I want?

To be honest, I feel embarrassment writing this out. I have many conflicting thoughts about what has happened. Part of me feels like I could have avoided a lot of this by being more thoughtful or doing more research into what others are doing. Another part screams that I should just stop being such an engineer. That business is a much wider domain than engineering and I should shift my focus onto other aspects of the business. Another wonders if I can sustain working on something long term if I am not proud of it. A final part thinks that maybe the embarrassment that I feel is exacerbated by the information that I have now on how to do things better. That as long as I learn my lesson and course correct faster in the future I will be ok.

The struggle to achieve what I hoped would be a relatively modest goal of making a dollar has me questioning if I even want what I say I want.